Introduction to Computer History

The history of computers is a fascinating journey that highlights humanity’s relentless quest for innovation and efficiency. Understanding the evolution of computers is essential not only for appreciating the technological advancements that have propelled society forward but also for comprehending the underlying principles that shaped modern computing. By studying computer history, one gains insight into how various inventions, discoveries, and societal needs intersected to forge the complex landscape of today’s technology.

From the earliest mechanical devices to the sophisticated digital systems prevalent today, computers have undergone significant transformations. The timeline begins with primitive counting tools such as the abacus, eventually progressing to the invention of the first programmable machines in the 19th century. These early innovations laid the groundwork for more advanced systems, leading to the development of electronic computers in the 20th century. Each milestone not only marks a technological achievement but also reflects the social conditions and intellectual currents of its time.

The evolution of computers has not merely been a linear path of improvements; it encompasses diverse influences and interdisciplinary advancements. For instance, the transition from vacuum tubes to transistors revolutionized computing, making machines smaller, faster, and more reliable. This shift had profound implications, catalyzing the rise of personal computing and the digital age. Furthermore, milestones such as the introduction of the internet have transformed communication and information dissemination, reshaping global interactions.

Understanding computer history also helps in recognizing potential future developments, because it provides context for evaluating emerging technologies and their societal impacts. The sections that follow trace pivotal moments in computer history and show how these events have collectively shaped the world we inhabit today.

Early Computing Concepts (BC – 1000 AD)

The history of computing can be traced back to ancient civilizations, where the first concepts and instruments emerged that provided the foundation for modern computing. One of the earliest tools used for calculation was the abacus, whose earliest known forms appeared in ancient Mesopotamia around 2700 to 2300 BC. Early versions were counting boards on which pebbles or tokens were moved between marked columns; later versions used beads sliding along rods. Either way, the device let users perform arithmetic with relative ease. The abacus served merchants and traders as a practical tool, and it is an early example of calculation reduced to a repeatable procedure, the idea at the heart of later algorithms.

The development of mathematics during this period also significantly influenced computing concepts. Ancient Greek mathematicians such as Euclid and Archimedes contributed foundational results in geometry and the theory of numbers that are still relevant today. The positional decimal number system developed in ancient India was another vital advance: because the value of a digit depends on its position, large numbers can be written compactly and manipulated digit by digit, a principle that underlies the number representations used in computer programming and data processing.

Chinese mathematicians made another notable contribution with counting rods, which were in use by around the 3rd century BC. These rods were used for calculation and laid the groundwork for future mathematical instruments. Meanwhile, the Islamic Golden Age (8th to 14th century) saw significant developments in algebra and numerical systems. Notably, Al-Khwarizmi, a 9th-century scholar often referred to as the father of algebra, formalized step-by-step methods for solving equations; the word “algorithm” is itself derived from the Latin form of his name.

In summary, early computing concepts from BC to 1000 AD reflect a rich tapestry of inventions and mathematical theories. These advancements not only facilitated practical computation but also set the stage for the digital revolution that would unfold centuries later.

The Renaissance and Advancements in Mathematics (1000 – 1400)

The centuries from 1000 to 1400 span the late medieval period and the opening of the Renaissance, which is conventionally dated from the 14th to the 17th century. Together they marked a significant turning point in the evolution of mathematics and logic. European scholars took a renewed interest in classical texts, particularly those of ancient Greek and Roman thinkers, many of which had been preserved and extended by scholars in the Islamic world. Mathematicians and philosophers of this time helped bridge the gap between ancient knowledge and modern scientific thought, laying the groundwork for later computational advances.

The key figures of this period shaped mathematical practice for centuries to come. Notably, Leonardo of Pisa, also known as Fibonacci, presented what is now called the Fibonacci sequence to European readers in his 1202 work, “Liber Abaci.” In this sequence each number is the sum of the two before it, and it has since found application in diverse areas, including the study of nature, art, and finance. More consequentially, “Liber Abaci” popularized the Hindu-Arabic numeral system in Europe, which simplified calculation and paved the way for more sophisticated algorithms later in computational history.
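To make the sequence concrete, here is a minimal Python sketch. It is an illustration only, not something taken from “Liber Abaci”: the function name `fibonacci` and the choice to start the sequence at 1, 1 are assumptions made for this example. Each term is simply the sum of the two terms before it.

```python
def fibonacci(count):
    """Return the first `count` terms of the sequence 1, 1, 2, 3, 5, ...

    Starting at 1, 1 is an assumption of this sketch; some presentations
    start at 0, 1 instead.
    """
    terms = []
    a, b = 1, 1
    for _ in range(count):
        terms.append(a)
        # The next term is the sum of the current pair.
        a, b = b, a + b
    return terms


print(fibonacci(10))  # prints [1, 1, 2, 3, 5, 8, 13, 21, 34, 55]
```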

The work of later thinkers such as René Descartes and George Boole, though it falls after the period covered here, built on these foundations and played a crucial role in the progression of logic and mathematical reasoning. In the 17th century, Descartes’ Cartesian coordinate system made it possible to represent algebraic equations geometrically, which greatly simplified the graphing of functions. In the 19th century, Boole’s development of Boolean algebra would become essential to computer science, proving foundational for digital circuit design and programming.
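As a rough illustration of why Boolean algebra matters for digital circuits, the sketch below builds binary addition out of nothing but AND, OR, and XOR operations on single bits. The function names `half_adder` and `full_adder` are illustrative choices for this example, and the code models the logic only, not any particular hardware.

```python
def half_adder(a, b):
    """Add two single bits using only Boolean operations.

    Returns (sum_bit, carry_bit).
    """
    sum_bit = a ^ b    # XOR: 1 when exactly one input is 1
    carry_bit = a & b  # AND: 1 only when both inputs are 1
    return sum_bit, carry_bit


def full_adder(a, b, carry_in):
    """Add two bits plus an incoming carry by chaining two half adders."""
    partial_sum, carry_one = half_adder(a, b)
    sum_bit, carry_two = half_adder(partial_sum, carry_in)
    # OR: a carry goes out if either stage produced one.
    return sum_bit, carry_one | carry_two


print(half_adder(1, 1))     # prints (0, 1), i.e. binary 10
print(full_adder(1, 1, 1))  # prints (1, 1), i.e. binary 11
```

Chaining one full adder per digit is, in principle, how a circuit can add multi-bit numbers, which is one reason a 19th-century algebra of true and false became so useful a century later.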

The synthesis of mathematical ideas during this period stimulated advances in many scientific fields and fostered an environment conducive to the exploration of algorithms. The systematic methods established at this time gave mathematicians repeatable problem-solving strategies, which would eventually feed into proto-computational theories and practices. This intellectual legacy set the stage for the age of computation to follow, influencing the development of algorithms that remain relevant in contemporary technology.

The Birth of Mechanical Calculators (1500 – 1700)

The period from 1500 to 1700 marked a significant turning point in the history of computing with the invention of mechanical calculators. These pioneering devices were designed to assist with mathematical calculations, thereby improving both accuracy and efficiency in mathematical operations. Two notable figures during this period were Blaise Pascal and Gottfried Wilhelm Leibniz, whose innovations laid the groundwork for future computing technologies.

Blaise Pascal, a French mathematician and physicist, invented the Pascaline in 1642, considered one of the first mechanical calculators. This device utilized gears and levers to perform addition and subtraction. The Pascaline worked through a series of rotating dials that represented individual digits; as a user turned the dials, the machine would execute calculations automatically. Although it was not widely adopted due to its complexity and price, the Pascaline demonstrated the potential for machines to assist humans in performing arithmetic operations.

Gottfried Wilhelm Leibniz extended Pascal’s work with the Step Reckoner, which he began designing around 1672. The machine was more ambitious than the Pascaline, intended to perform not only addition and subtraction but also multiplication and division. Leibniz’s design was built around stepped drums, cylinders with teeth of graduated length that are now often called Leibniz wheels, which let the machine carry out multiplication as repeated addition. Although the mechanism proved difficult to build reliably, the Step Reckoner showcased the evolution of computational devices and highlighted the growing need for tools that could simplify complex calculations.

The innovations brought forth by Pascal and Leibniz were instrumental in paving the way for future developments in computing machinery. Their remarkable inventions not only enhanced the capabilities of mathematical calculations but also inspired subsequent generations of inventors, fueling significant advancements in technology and establishing the foundation for the modern computing era.

Computing in the Industrial Age (1800 – 1940)

The period from the early 1800s to 1940 marks a pivotal era in the evolution of computing, characterized by technological advances that laid the groundwork for the digital age. One of the most influential figures of this time was Charles Babbage, often considered the father of the computer. In 1837 Babbage described the Analytical Engine, a design that embodied the fundamental principles of modern computing. The machine was intended to be a mechanical, general-purpose calculator able to carry out any calculation as a sequence of operations, and it took its input from punched cards, anticipating an input method that electronic computers would use more than a century later. The Analytical Engine was never completed in Babbage’s lifetime.

Additionally, Ada Lovelace, an English mathematician, made significant contributions to Babbage’s work. She is often recognized as the first computer programmer for her detailed notes and algorithms intended for the Analytical Engine. Lovelace understood the potential of Babbage’s invention, not merely as a calculating machine but as a device capable of manipulating symbols based on rules, which is foundational to modern computing theory. Her insights foreshadowed the capabilities of computers beyond mere arithmetic computation, encompassing tasks in various domains such as science and art.

The transition from purely mechanical devices to electrically powered computing systems began to take shape during the late 19th and early 20th centuries. Devices such as the telegraph and telephone demonstrated that electricity could carry and switch signals far faster than any mechanical linkage. Electromechanical machines, which combined electrical switching with moving parts, followed and marked a significant departure from manual operation. This era brought together engineering, mathematics, and physics, and by the late 1930s that convergence had produced the first steps toward electronic computing, setting the stage for the first digital computers during World War II.

The Electronic Era (1940 – 1960)

The electronic era marked a significant transition in the history of computing, introducing electronic computers that revolutionized the speed and efficiency of calculations. This period, covering the decades from 1940 to 1960, laid the foundation for modern computing through the development of groundbreaking technologies and systems. One of the hallmark achievements of this time was the Electronic Numerical Integrator and Computer (ENIAC), which was completed in 1945 and publicly unveiled in 1946. As one of the earliest electronic general-purpose computers, ENIAC used vacuum tubes for its processing, paving the way for future advancements.

The shift from mechanical to electronic computing had profound impacts on computational capabilities. Mechanical computers, which relied on gears, levers, and other physical components, were significantly slower than their electronic counterparts. Vacuum tubes, which act as electronic switches with no moving parts, could change state far faster than any mechanical component, although individual tubes burned out often and had to be replaced regularly. This innovation not only accelerated calculations but also enabled computers to perform more complex tasks, broadening their applications beyond purely mathematical work.

Developments in the electronic era extended beyond ENIAC. The transistor, invented at Bell Labs in 1947, began to replace the vacuum tube in computers during the mid-to-late 1950s thanks to its smaller size and greater reliability. Transistors consumed less power and generated less heat, which opened the way to the miniaturization of computers. This shift marked the beginning of the second generation of computers, which used transistors to improve speed and efficiency.

The work accomplished during this era showcased the potential of electronic computing. As computers moved to electronic components, the foundation for modern computing was firmly established, prompting remarkable changes in fields including science, business, and education.

The Age of Microprocessors and Personal Computers (1960 – 1980)

The period from 1960 to 1980 marked a revolutionary era in the domain of computing, characterized by the development of microprocessors and the advent of personal computers. The introduction of the Intel 4004 in 1971 played a pivotal role in this transformation. As the first commercially available microprocessor, the Intel 4004 provided a compact and efficient means for executing complex calculations, which significantly reduced the size and cost of computing systems. This innovation set the stage for a new wave of technological advancements that would shape the computing landscape.

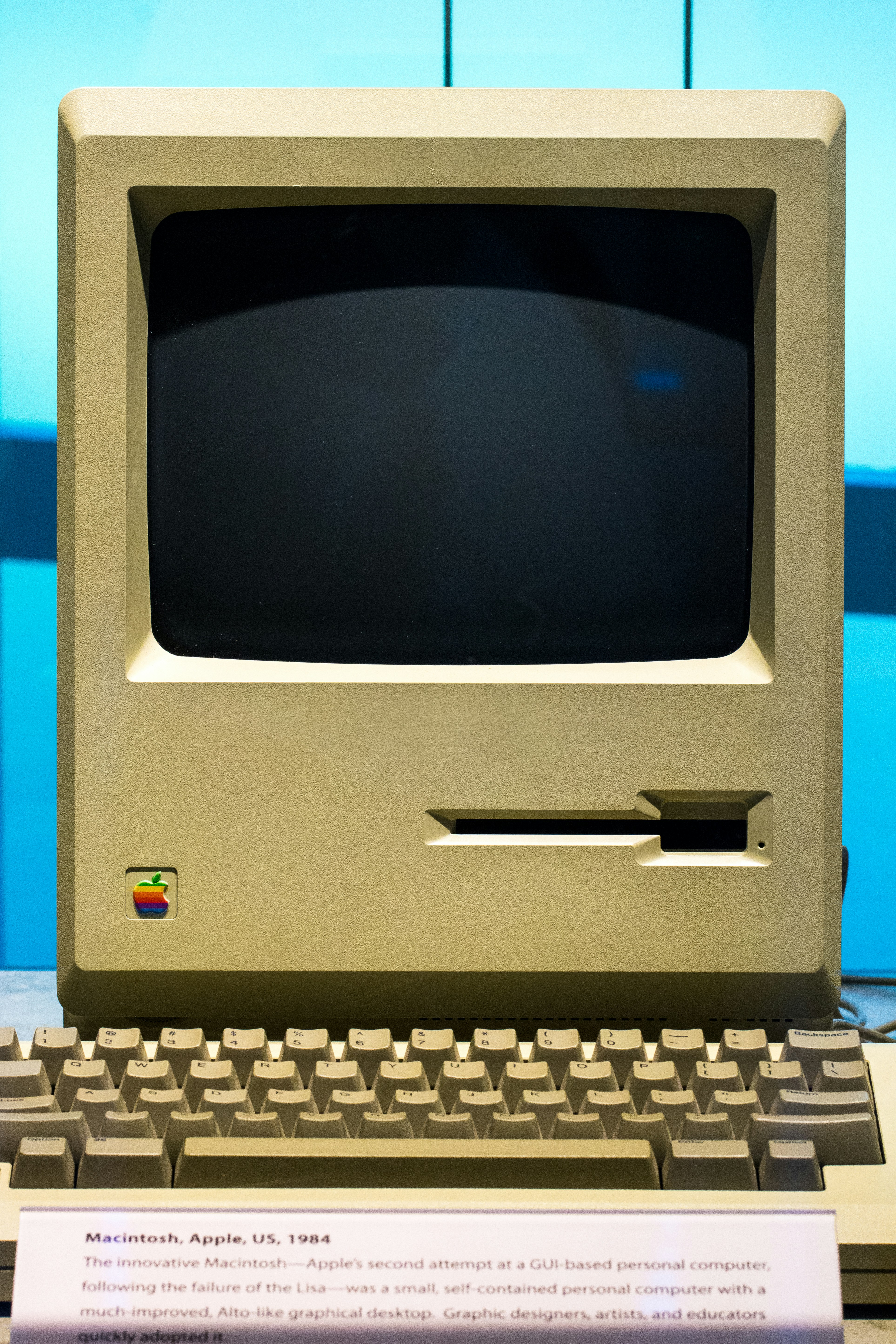

During this timeframe, personal computers began to emerge as accessible alternatives to the large, room-sized mainframes that were prevalent in businesses and institutions. Apple introduced the Apple I in 1976, and IBM followed with the IBM PC in 1981, just after the period covered here. These personal computers not only democratized access to technology but also fostered a growing ecosystem of software development. Individuals and small businesses could now use computing power for tasks such as word processing, data management, and educational applications, fundamentally altering how people interacted with technology.

The expansion of programming languages during this era further fueled the growth of personal computing. Languages such as BASIC and Pascal made it easier for programmers to create software, encouraging a new generation of developers to innovate and contribute to the burgeoning field. This period fostered a culture of experimentation and creativity, leading to the establishment of software companies that would play a significant role in the tech industry.

In summary, the age of microprocessors and personal computers from 1960 to 1980 was a transformative period that laid the groundwork for modern computing. Innovations during this time not only enabled the proliferation of technology in everyday life but also fundamentally changed the landscape of business and communication.

The Internet Revolution and Digital Age (1980 – 2000)

The period from 1980 to 2000 marked a significant transformation in global communication and information access, primarily driven by the rapid evolution of the internet. The groundwork for this technological boom was laid in the 1960s; however, it was during the 1980s that the internet began to take a form recognizable today. By 1983, the adoption of the Transmission Control Protocol and Internet Protocol (TCP/IP) set the stage for diverse networks to interconnect seamlessly, forming the foundation of modern internet architecture.

Tim Berners-Lee, a British computer scientist, proposed the World Wide Web in 1989 and made it publicly available in 1991, revolutionizing how information was shared and accessed worldwide. He conceived a system that allowed people to publish and link information across different computers using hypertext. This innovation made the internet much more user-friendly and accessible to the average person, leading to a rapid increase in users and content. The release of Mosaic, the first widely popular web browser, in 1993 simplified web navigation and played a pivotal role in the web’s rapid expansion.

The mid-to-late 1990s witnessed the emergence of e-commerce, further reshaping global communication and business practices. Companies like Amazon and eBay entered the market in the mid-1990s, demonstrating how traditional commerce could move into the digital world. The advent of secure online payment systems built trust in online transactions, creating a thriving ecosystem for digital businesses. Additionally, the arrival of search engines such as Google in 1998 transformed information retrieval, allowing users to find relevant content far more easily and efficiently than before.

This period not only changed the landscape of communication but also laid the groundwork for the interconnected digital age we experience today. As network infrastructures improved and web-based technologies flourished, access to information became a fundamental aspect of daily life, reshaping societies globally.

Emerging Technologies and Innovations (2000 – 2020)

The first two decades of the 21st century witnessed an extraordinary evolution in technology, characterized by groundbreaking innovations that transformed the way we interact with the digital world. Mobile computing became a foundational element of everyday life, with the proliferation of smartphones and tablet devices. Apple’s introduction of the iPhone in 2007 revolutionized the mobile landscape, making powerful computing capabilities accessible to the masses. Subsequently, Android emerged as a formidable competitor, fostering a diverse ecosystem of applications and services.

Simultaneously, cloud technology gained prominence, enabling individuals and businesses to store and access data remotely via the internet. Services such as Amazon Web Services (AWS), Google Cloud, and Microsoft Azure provided scalable infrastructure, significantly altering the dynamics of data storage and management. This shift allowed organizations to optimize resources, reduce costs, and enhance collaboration across various platforms.

Moreover, artificial intelligence (AI) evolved rapidly during this period, reshaping multiple sectors such as healthcare, finance, and manufacturing. Technological advancements in machine learning and neural networks led to the development of sophisticated algorithms capable of processing vast amounts of data, thereby facilitating innovations such as voice recognition and autonomous systems. The rise of virtual assistants, like Amazon’s Alexa and Apple’s Siri, exemplified the increasing integration of AI into daily life.

Amid these transformations, big data emerged as a critical focus area, driven by the need to analyze and derive insights from the exponential growth of information generated across industries. The capacity to process and interpret large datasets empowered organizations to make informed decisions, personalize customer experiences, and drive efficiencies in operations.

Collectively, these emerging technologies and innovations laid the groundwork for immense change, influencing how individuals and institutions operate in this interconnected digital age. As we delve deeper into the 21st century, the anticipated advancements will likely continue to redefine the boundaries of what is possible in computer technology.

Looking Ahead: The Future of Computing (2020 – Today)

As we venture further into the 21st century, the future of computing is poised for remarkable evolution, shaped significantly by emerging technologies that promise to redefine our understanding of what is possible. One of the most profound advancements anticipated is in the field of artificial intelligence (AI). The integration of AI across various domains, such as healthcare, finance, and education, is expected to enhance decision-making processes, automate mundane tasks, and create personalized user experiences. Moreover, the increasing sophistication of machine learning algorithms will likely contribute to the development of systems that not only learn from data but also adapt and improve in real time.

Another groundbreaking frontier is quantum computing, which holds the potential to solve certain classes of complex problems much faster than traditional computers. Quantum computers use the principles of quantum mechanics, such as superposition and entanglement, to perform some calculations in far fewer steps than classical machines would need. This could lead to significant advances in areas such as cryptography, drug discovery, and optimization problems across many industries. As research in quantum technology accelerates, we can expect to see practical applications emerge that were once confined to theoretical discussion.

The significance of cybersecurity in this increasingly interconnected world cannot be overstated. As computing power grows, so too do the threats associated with cyberattacks. Innovations in cybersecurity will be critical to protecting sensitive information and maintaining trust in digital systems. Future developments are likely to include the use of AI for threat detection and response, enhancing our capability to safeguard networks against malicious activities.

In conclusion, the landscape of computing is rapidly evolving, continuously influenced by advancements in AI, quantum technologies, and cybersecurity needs. This ongoing evolution will undoubtedly have profound implications for society, changing how we work, communicate, and interact with one another and the digital world around us. As we look ahead, it becomes clear that embracing these technologies will be essential for navigating the challenges and opportunities of the future.