Introduction to Parallel Processing

Parallel processing is a computational paradigm that involves the simultaneous execution of multiple calculations or processes. This approach has emerged as a fundamental concept in modern computer architecture, signifying a transformative shift from the traditional model of sequential processing, where tasks are executed one after another. The evolution towards parallel processing is driven by the increasing demands for enhanced performance and efficiency in various computing tasks.

The rise of complex applications, including data analysis, scientific simulations, and real-time processing, has underscored the limitations of sequential processing. In a sequential model, the CPU completes one operation before moving on to the next, resulting in bottlenecks, especially when handling large volumes of data or intricate algorithms. Parallel processing addresses these challenges by distributing tasks across multiple processing units, allowing for concurrent execution and significantly reducing processing time.

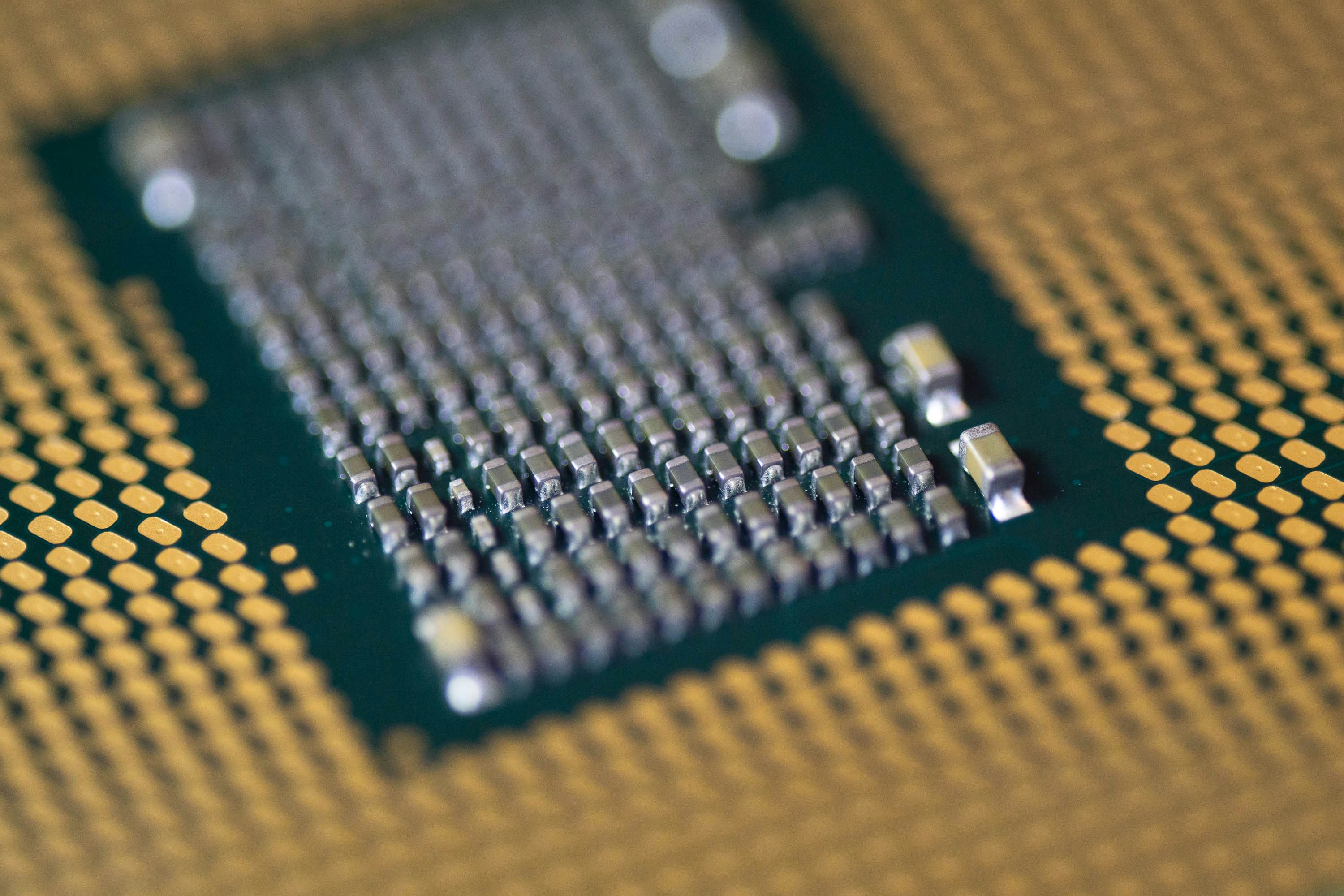

Several factors have contributed to the growing significance of parallel processing in computer architecture. The advent of multi-core and many-core processors has made it feasible to implement parallel computing on a larger scale. Additionally, the increasing volume of data in today’s digital landscape necessitates efficient processing methods to derive meaningful insights quickly. Furthermore, advances in software frameworks and programming models have simplified the development and deployment of parallel algorithms, making it accessible to a wider audience of developers and researchers.

As computing power advances, the emphasis on parallel processing continues to grow. This reflects not only a technical evolution but also a response to the diverse requirements of industries reliant on data-driven decisions and high-performance computing. Understanding the principles and applications of parallel processing is essential for harnessing its potential fully, paving the way for innovations across various fields.

The Shift from Sequential to Parallel Processing

The evolution of computer architecture has experienced a significant transformation, particularly with the transition from sequential to parallel processing. Historically, sequential processing dominated the landscape of computing, where tasks were executed one after the other in a linear fashion. While this approach was effective during the early stages of computer development, several fundamental limitations emerged, compelling hardware producers to explore parallel processing architectures.

One of the primary drawbacks of sequential processing is its tendency to create performance bottlenecks. In a sequential system, total execution time is the sum of the times of all operations, because each one must finish before the next can begin, so a single slow step delays everything queued behind it. As the complexity of applications has increased, the inefficiencies inherent in this model have become more pronounced. Programs that require extensive processing power struggle to finish in acceptable time, resulting in user dissatisfaction and operational inefficiencies.

Additionally, sequential processing often leads to underutilization of available resources. Modern computer architectures are designed with multiple cores and advanced processing units capable of executing numerous operations simultaneously. However, in a traditional sequential setup, only a single core typically engages in the workload at a time, preventing other powerful components from being effectively leveraged. This situation ultimately hinders overall system performance.

The shift towards parallel processing arises as a solution to these limitations. By allowing multiple tasks to be performed concurrently, parallel architectures significantly enhance computational speed and efficiency. This adaptability is especially critical in today’s data-driven landscape, where large datasets and demanding applications necessitate swift data processing capabilities. Consequently, the transition from sequential to parallel processing represents a pivotal advancement in modern computer architecture, setting the stage for improved performance and resource utilization.

Challenges of Parallel Programming

Parallel programming, while advantageous for improving the performance of software applications, presents a variety of challenges that can complicate the development process. One of the primary difficulties lies in data synchronization. When multiple threads or processes access shared data simultaneously, it is crucial to ensure that this data remains consistent. If not properly handled, different threads may read and write data in an unpredictable manner, leading to conflicts and incorrect results. Developers must implement synchronization mechanisms, such as locks, semaphores, or barriers, to control access to shared resources. However, these mechanisms can introduce overhead and limit the performance benefits of parallel execution.
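As a minimal sketch of the lock-based synchronization described above, the following Python example guards a shared counter with `threading.Lock`. The function name `run_counter` and its parameters are illustrative assumptions, not taken from any particular codebase.

```python
import threading


def run_counter(num_threads: int = 4, increments: int = 10_000) -> int:
    """Increment a shared counter from several threads, guarded by a lock."""
    counter = 0
    lock = threading.Lock()

    def worker() -> None:
        nonlocal counter
        for _ in range(increments):
            # Only one thread at a time may perform the read-modify-write.
            with lock:
                counter += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


total = run_counter()
print(total)
```

Because every increment acquires the lock, the result is always `num_threads * increments`. The price is the overhead mentioned above: the threads spend much of their time waiting for one another.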

Another significant challenge in parallel programming is the risk of race conditions, which occur when the outcome of a program depends on the non-deterministic ordering of events among concurrent threads. Race conditions can lead to unpredictable behavior and hard-to-track bugs, making them particularly troublesome during the debugging process. Identifying and resolving race conditions require developers to have a deep understanding of both the code and the underlying architecture, complicating the process of writing efficient parallel code.
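Real race conditions are timing-dependent and hard to reproduce on demand, so the sketch below simulates one deterministically instead. It models two "threads" as Python generators whose increment is split into a read step and a write step, and replays them under an explicit schedule. The names `increment` and `run_schedule` are hypothetical helpers for illustration only.

```python
from typing import Dict, Generator, List


def increment(shared: Dict[str, int]) -> Generator[None, None, None]:
    """One non-atomic increment, split into a read step and a write step."""
    local = shared["counter"]      # step 1: read the shared value
    yield                          # a context switch may happen here
    shared["counter"] = local + 1  # step 2: write back a possibly stale value


def run_schedule(schedule: List[int]) -> int:
    """Run two simulated threads; `schedule` names which thread takes each step."""
    shared = {"counter": 0}
    tasks = [increment(shared), increment(shared)]
    for thread_id in schedule:
        next(tasks[thread_id], None)  # advance that thread by one step
    return shared["counter"]


serial = run_schedule([0, 0, 1, 1])       # thread 0 finishes before thread 1 starts
interleaved = run_schedule([0, 1, 0, 1])  # both read before either writes
print(serial, interleaved)  # prints "2 1"
```

The same two increments produce 2 under one ordering and 1 under the other. The second ordering is a lost update, which is exactly the kind of outcome that depends on the non-deterministic ordering of events.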

Moreover, debugging parallel applications is inherently more complex than debugging sequential programs. Traditional debugging techniques, which often involve stepping through code line by line, can be ineffective when multiple threads are executing simultaneously. Tools designed for parallel debugging, such as thread analyzers and race condition detectors, are essential but can also come with a steep learning curve. The sheer volume of possible interactions between threads can exponentially increase the difficulty of pinpointing the source of a bug, further underscoring the complexities inherent to parallel programming.

In summary, the challenges of parallel programming, including data synchronization, race conditions, and debugging difficulties, require developers to employ specialized strategies and tools. As parallel computing continues to evolve, addressing these challenges remains a critical focus for those who seek to leverage its potential fully.

Amdahl’s Law and Performance Gains

Amdahl’s Law serves as a critical principle in the analysis of parallel processing within modern computer architecture. Formulated by Gene Amdahl in 1967, the law provides a formula to predict the potential speedup in executing a task when a portion of the work is parallelized. Specifically, it quantifies the performance gains that can be attained based on the fraction of a task that can be executed in parallel versus the fraction that must remain serial, or non-parallelizable.

The law is mathematically represented as follows: Speedup = 1 / (S + P/N), where S is the fraction of the task that is sequential, P is the fraction that can be parallelized (so that S + P = 1), and N is the number of processors. As N grows, the term P/N approaches zero and the speedup approaches 1/S, so the serial portion sets a hard ceiling no matter how many processors are added. A prime implication of Amdahl’s Law is that significant performance improvements are only possible when the parallelizable fraction is substantial. Conversely, if the sequential portion is large, the overall speedup remains constrained.

This relationship highlights a critical aspect of parallel processing: diminishing returns. For instance, if a task is 95% parallelizable, adding processors yields substantial gains at first, but the remaining 5% ultimately dictates the maximum speedup: it can never exceed 1 / 0.05 = 20, and even 1,024 processors reach only about 19.6. Consequently, understanding Amdahl’s Law is essential for developers and system architects, as it assists in identifying the limits of performance optimization and guides the effective allocation of resources in parallel computing environments.
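The formula is easy to explore numerically. The following Python sketch evaluates it for a few processor counts; the function names `amdahl_speedup` and `max_speedup` are illustrative assumptions, and the parallel fraction is passed in as P with S computed as 1 - P.

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Speedup = 1 / (S + P/N), with S = 1 - P."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / processors)


def max_speedup(parallel_fraction: float) -> float:
    """Limit of the speedup as the processor count grows without bound: 1 / S."""
    serial_fraction = 1.0 - parallel_fraction
    return float("inf") if serial_fraction == 0.0 else 1.0 / serial_fraction


for n in (1, 2, 8, 64, 1024):
    print(f"N={n:5d}  speedup={amdahl_speedup(0.95, n):6.2f}")
print(f"ceiling  speedup={max_speedup(0.95):6.2f}")
```

Running the loop for a 95% parallelizable task shows the curve flattening: each additional batch of processors buys less than the one before, and the values approach but never reach 20.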

In conclusion, Amdahl’s Law underscores the limitations inherent in parallel processing, emphasizing the need for careful assessment of task components to maximize efficiency in modern computer architecture.

Shared Memory Multiprocessing

Shared memory multiprocessing is a pivotal concept in modern computer architecture, allowing multiple processors to access the same memory space. This design enables a high-speed communication mechanism among processors and facilitates efficient data sharing. By having a common memory pool, processors can read and write data in a flexible and effective manner, reducing the latency associated with inter-processor communication compared to message-passing systems.

Shared memory systems scale well up to a point. When processing demands increase, additional processors or cores can be added to the system, enhancing computational power while sharing access to the existing memory resources. This improves performance for parallel applications, especially in scenarios involving high levels of data access and manipulation. In practice, memory bandwidth and the traffic needed to keep processor caches coherent limit how many processors can usefully share one memory.

However, shared memory multiprocessing is not without its challenges. One of the main issues is memory contention, where multiple processors attempt to access the same memory location simultaneously. This scenario can lead to performance degradation, as processors must wait for access to memory, thereby introducing inefficiencies. To mitigate this problem, sophisticated synchronization mechanisms are required. These mechanisms, such as locks, semaphores, and barriers, help manage concurrent access and ensure data consistency and integrity.
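Of the mechanisms listed above, barriers are perhaps the least familiar. The sketch below uses Python's `threading.Barrier` so that no thread reads the shared results until every thread has finished writing its part. The function `two_phase_sum` and its strided partitioning are assumptions made for illustration.

```python
import threading
from typing import List


def two_phase_sum(values: List[int], num_threads: int = 4) -> List[int]:
    """Each thread sums a slice, waits at a barrier, then reads all partial sums."""
    partial = [0] * num_threads   # shared memory written in phase 1
    results = [0] * num_threads   # one output slot per thread
    barrier = threading.Barrier(num_threads)

    def worker(idx: int) -> None:
        # Phase 1: each thread writes only its own slot, so no lock is needed.
        partial[idx] = sum(values[idx::num_threads])
        # Block until every thread has finished phase 1.
        barrier.wait()
        # Phase 2: all partial sums are now complete and safe to read.
        results[idx] = sum(partial)

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


print(two_phase_sum(list(range(100))))
```

Without the barrier, a fast thread could enter phase 2 and read slots that slower threads have not yet filled in, producing an incomplete total.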

Another challenge associated with shared memory multiprocessing is the complexity of multi-threading. As processors execute threads simultaneously, the risk of race conditions may arise, where the final state of the shared data depends on the sequence of execution. Careful design of the processing model and synchronization protocols is essential to prevent such issues from impacting system reliability.

In essence, shared memory multiprocessing brings significant benefits for speed and efficiency in data handling but also necessitates careful management of concurrency and synchronization to harness its full potential.

Cluster Processing

Cluster processing serves as an efficient method for achieving parallelism in modern computer architectures. By utilizing clusters of interconnected computers, resources can be combined to perform complex tasks more effectively than a single machine could handle alone. In a cluster, several computers, commonly referred to as nodes, work collaboratively to process large sets of data or execute compute-intensive applications. This collaboration enables the distribution of workloads across multiple nodes, maximizing resource utilization and minimizing processing time.

Central to the functionality of cluster processing are distributed computing frameworks, which facilitate communication and resource management between nodes. These frameworks, such as Apache Hadoop, Apache Spark, and others, allow for the seamless execution of concurrent tasks by dividing the workload into smaller, manageable segments. Each node performs its designated tasks independently while maintaining synchronization with other nodes, thereby ensuring efficiency and coherence in operations. The ability to scale horizontally by adding more nodes to the cluster enhances the processing power, making it an attractive solution for organizations requiring high-performance computing capabilities.
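The divide-and-combine pattern these frameworks popularized can be sketched in plain Python. The example below is not the Hadoop or Spark API; it is a single-process simulation of the map, shuffle, and reduce phases of a word count, where each string in `chunks` stands in for the data held by one node. All function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def map_phase(chunk: str) -> List[Tuple[str, int]]:
    """Runs independently on each node: emit (word, 1) for every word."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group values by key, as the framework would do across the network."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: Dict[str, List[int]]) -> Dict[str, int]:
    """Combine the values collected for each key."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(chunks: List[str]) -> Dict[str, int]:
    # Here the map calls run one after another; on a cluster each chunk
    # would be processed by a different node at the same time.
    mapped = [pair for chunk in chunks for pair in map_phase(chunk)]
    return reduce_phase(shuffle(mapped))


print(word_count(["the quick brown fox", "the lazy dog", "The end"]))
```

The key design point is that `map_phase` needs no information from other chunks, which is what lets a framework run it on many nodes at once and add more nodes as the data grows.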

Despite its many advantages, managing cluster systems poses certain challenges. For instance, network latency can significantly impact communication speed between nodes, leading to delays in task execution. Additionally, ensuring that workloads are evenly distributed among nodes is crucial to avoid bottlenecks, which can occur if certain nodes become overloaded while others remain underutilized. Furthermore, the complexity of maintaining a cluster can necessitate specialized skills and knowledge, which may result in higher operational costs. Nevertheless, the benefits of cluster processing, including enhanced performance and increased flexibility, generally outweigh these challenges, making it a vital technique in the realm of parallel processing in computer architecture.
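To make the load-balancing concern concrete, here is a small sketch of one common heuristic: sort tasks from largest to smallest and always hand the next task to the currently least-loaded node. This is a generic greedy strategy chosen for illustration, not the scheduler of any specific cluster manager, and `assign_tasks` is a hypothetical name.

```python
import heapq
from typing import Dict, List


def assign_tasks(task_costs: List[int], num_nodes: int) -> Dict[int, List[int]]:
    """Greedy balancing: give each task, largest first, to the least-loaded node."""
    # Heap entries are (current_load, node_id); the smallest load is popped first.
    heap = [(0, node) for node in range(num_nodes)]
    heapq.heapify(heap)
    assignment: Dict[int, List[int]] = {node: [] for node in range(num_nodes)}
    for cost in sorted(task_costs, reverse=True):
        load, node = heapq.heappop(heap)
        assignment[node].append(cost)
        heapq.heappush(heap, (load + cost, node))
    return assignment


plan = assign_tasks([5, 3, 3, 2, 2, 1], num_nodes=2)
for node, tasks in plan.items():
    print(f"node {node}: tasks={tasks} load={sum(tasks)}")
```

The heuristic does not guarantee an optimal split in general, but it avoids the worst case described above, where one node is overloaded while others sit idle.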

Parallel Programming Techniques

Parallel processing has become a crucial aspect of modern computing, enabling the execution of multiple operations simultaneously to improve performance and efficiency. Various programming techniques are utilized in parallel processing to facilitate the development of applications that can leverage the capabilities of parallel architectures, such as multicore processors and distributed systems.

One prominent technique is message passing, which involves the exchange of messages between independent processes or threads. In this paradigm, processes communicate and synchronize their actions by sending and receiving messages, allowing them to operate concurrently without sharing a common memory space. This approach is particularly useful in distributed computing environments where different nodes operate on distinct hardware and may not have access to shared memory. Libraries such as MPI (Message Passing Interface) are commonly used to implement message passing in high-performance computing applications.
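The sketch below illustrates the message-passing style using only the Python standard library. It is not MPI: as a simplifying assumption, two threads stand in for independent processes, and `queue.Queue` objects act as the communication channels. The worker never touches the sender's data directly; everything it knows arrives as a message.

```python
import queue
import threading
from typing import List


def worker(inbox: "queue.Queue", outbox: "queue.Queue") -> None:
    """Receive numbers as messages and reply with their squares."""
    while True:
        message = inbox.get()
        if message is None:  # sentinel message: no more work
            break
        outbox.put(message * message)


def square_via_messages(numbers: List[int]) -> List[int]:
    inbox: "queue.Queue" = queue.Queue()
    outbox: "queue.Queue" = queue.Queue()
    t = threading.Thread(target=worker, args=(inbox, outbox))
    t.start()
    for n in numbers:
        inbox.put(n)      # send one request message per number
    inbox.put(None)       # tell the worker to shut down
    t.join()
    # A single worker replies in the order it received the requests.
    return [outbox.get() for _ in numbers]


print(square_via_messages([1, 2, 3, 4]))
```

In a real distributed setting the queues would be replaced by network sends and receives, but the structure of the program, with explicit messages and an explicit shutdown signal, stays much the same.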

Data parallelism is another significant technique employed in parallel programming. It focuses on distributing a large dataset across multiple processing units, where the same operation is performed on different chunks of data simultaneously. This method is particularly advantageous for applications involving large-scale numerical computations, such as simulations and data analysis. Tools like OpenMP and CUDA leverage data parallelism to enhance performance in applications running on shared memory systems and GPUs, respectively.
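A minimal sketch of data parallelism follows: the dataset is split into chunks and the same function is applied to every chunk through a worker pool. It uses Python's `concurrent.futures` rather than OpenMP or CUDA, and the helper names are illustrative. Note the assumption involved: in standard CPython builds, threads do not speed up CPU-bound pure-Python code because of the global interpreter lock, so this shows the structure of the technique rather than a guaranteed speedup.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def chunked(data: List[int], num_chunks: int) -> List[List[int]]:
    """Split data into at most num_chunks contiguous pieces of similar size."""
    size = -(-len(data) // num_chunks)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def sum_of_squares(chunk: List[int]) -> int:
    """The single operation applied identically to every chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data: List[int], workers: int = 4) -> int:
    if not data:
        return 0
    chunks = chunked(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Same function, different data: the defining trait of data parallelism.
        return sum(pool.map(sum_of_squares, chunks))


print(parallel_sum_of_squares(list(range(10))))  # prints 285
```

Swapping `ThreadPoolExecutor` for `ProcessPoolExecutor` is one way to obtain true parallel execution for CPU-bound work in Python, at the cost of copying each chunk to a separate process.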

Task parallelism complements these techniques by allowing different tasks to be performed concurrently. In this paradigm, distinct tasks, which may have varying computational workloads, can be executed in parallel. This approach improves resource utilization by distributing tasks across available processors. Frameworks such as Intel TBB (Threading Building Blocks, now distributed as oneTBB) facilitate task parallelism by providing high-level abstractions to manage task execution and synchronization.
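In contrast to data parallelism, task parallelism runs different functions at the same time. The sketch below submits three unrelated analyses of the same list to a thread pool and collects their results. It uses Python's `concurrent.futures` as a stand-in for a task framework such as TBB, and the three task functions are hypothetical examples.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def compute_mean(values: List[int]) -> float:
    return sum(values) / len(values)


def compute_max(values: List[int]) -> int:
    return max(values)


def count_even(values: List[int]) -> int:
    return sum(1 for v in values if v % 2 == 0)


def analyze(values: List[int]) -> Dict[str, float]:
    """Run three different tasks concurrently and gather their results."""
    with ThreadPoolExecutor(max_workers=3) as pool:
        futures = {
            "mean": pool.submit(compute_mean, values),
            "max": pool.submit(compute_max, values),
            "even": pool.submit(count_even, values),
        }
        # result() blocks until the corresponding task has finished.
        return {name: future.result() for name, future in futures.items()}


print(analyze([1, 2, 3, 4]))
```

Each task may take a different amount of time; the pool lets faster tasks finish without waiting for slower ones, and the caller synchronizes only when it asks for a result.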

Overall, these parallel programming techniques (message passing, data parallelism, and task parallelism) enable developers to write code that makes effective use of modern parallel architectures and can achieve significant performance gains across a wide range of applications.

Real-World Applications of Parallel Processing

Parallel processing has emerged as a critical component in modern computing, enabling more efficient problem-solving across various fields. One prominent application is in scientific computing, where complex simulations and calculations are necessary for research and development. For instance, climate modeling and molecular dynamics simulations require extensive computational resources. By utilizing parallel processing, researchers can distribute these tasks across multiple processors, significantly reducing the time required to perform simulations and analyze data, leading to quicker insights into critical scientific questions.

In the realm of big data analytics, the ability to handle vast datasets has become imperative. Organizations are now tasked with processing terabytes of information to glean actionable insights. Parallel processing allows data to be distributed across many servers so that multiple data streams are processed simultaneously. This accelerates data processing and makes results available in time to inform decisions. As companies increasingly rely on data-driven strategies, the role of parallel processing in big data analytics continues to grow.

Another significant area where parallel processing has made a substantial impact is artificial intelligence (AI). Training machine learning models involves processing large volumes of data, which can be immensely time-consuming. By deploying parallel processing techniques, developers can train models faster by utilizing multiple processors to handle different tasks such as data augmentation, model evaluation, and hyperparameter tuning without significant delays. This enhancement in processing speeds facilitates rapid advancements in AI applications, from natural language processing to image recognition, thereby accelerating the pace of innovation across diverse sectors.

Overall, the integration of parallel processing principles across scientific computing, big data analytics, and artificial intelligence demonstrates its pivotal role in solving complex problems efficiently. The ability to process multiple tasks concurrently ensures that modern computing solutions can meet the escalating demands of today’s digital landscape.

Future Trends in Parallel Processing

The landscape of parallel processing is evolving rapidly, driven by advancements in technology and the increasing demand for high-performance computing. One of the most significant emerging technologies is quantum computing. Unlike classical computing, which relies on bits as the smallest unit of data, quantum computing uses qubits, which can exist in superpositions of states. For certain classes of problems, such as integer factoring, known quantum algorithms are far faster than the best known classical methods, although this is not a general-purpose speedup and is not the same as running many classical tasks in parallel. As this technology matures, it has the potential to reshape sectors such as cryptography, material science, and complex system simulations.

Another promising area is neuromorphic computing, which mimics the neural architectures found in biological systems. This approach enhances parallel processing by utilizing spiking neural networks that can process information similarly to how the human brain functions. Neuromorphic chips are designed to be power-efficient and fault-tolerant, making them ideal for machine learning and artificial intelligence applications. This trend emphasizes the importance of developing hardware that corresponds with advanced software techniques, thereby pushing the boundaries of what parallel processing can achieve in real-time applications.

Furthermore, advancements in parallel programming languages and frameworks are set to significantly enhance the efficiency and accessibility of parallel processing technologies. The rise of languages that simplify the parallel programming model allows a broader range of developers to leverage parallel architectures effectively. As these languages evolve, the integration with hardware will become more seamless, enabling applications to take full advantage of multi-core and many-core processors. The continuous improvement of frameworks like OpenMP and MPI will also play a crucial role in optimizing performance and productivity in parallel programming.

Collectively, these trends present exciting opportunities for the future of parallel processing. As quantum and neuromorphic computing gain traction and programming paradigms evolve, the ultimate goal remains to facilitate more efficient computation and innovation across various industries.